Machine learning is rapidly becoming a defining feature of Internet of Things (IoT) devices. Appliances now support voice-driven interfaces and can respond intelligently to natural language. By demonstrating a process in front of a smartphone camera, you can now show robots how to move materials around the factory floor and how to program other machines, and smartphones themselves keep getting smarter. These applications rely on the most successful, complex, and data-intensive artificial intelligence architecture: the deep neural network (DNN).

Until now, deploying DNN technology in embedded systems has been limited by its demand for computing performance. Although inference requires less computation than training, a trained DNN must still perform billions of operations per second to recognize and analyze streaming input data such as voice and video. In many cases the processing is therefore offloaded to the cloud, which can supply ample computing power, but this is not an ideal solution for edge devices.
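To make the "billions per second" figure concrete, here is a rough sketch that counts the multiply-accumulate (MAC) operations of a single convolutional layer applied to a video stream. The layer dimensions and the 30 frames-per-second rate are illustrative assumptions, not figures from any specific network or product.

```python
def conv_macs(out_h, out_w, out_channels, in_channels, kernel_h, kernel_w):
    """MACs needed to compute one convolutional layer for one input frame."""
    # Each output element needs in_channels * kernel_h * kernel_w MACs.
    return out_h * out_w * out_channels * in_channels * kernel_h * kernel_w


def macs_per_second(macs_per_frame, frames_per_second):
    """Sustained MAC rate required to keep up with a video stream."""
    return macs_per_frame * frames_per_second


# Hypothetical first layer: 7x7 kernels, 3 input channels (RGB),
# 64 output channels, 112x112 output feature map.
layer_macs = conv_macs(112, 112, 64, 3, 7, 7)
rate = macs_per_second(layer_macs, 30)  # assumed 30 frames per second

print(f"MACs per frame:  {layer_macs:,}")  # 118,013,952
print(f"MACs per second: {rate:,}")        # 3,540,418,560
```

Under these assumptions, one layer alone already requires about 3.5 billion MACs per second, and a full network stacks many such layers.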

Mission-critical use cases such as self-driving vehicles and industrial robots use DNNs to recognize objects in real time and improve situational awareness. However, latency, bandwidth, and network availability issues make cloud computing a poor fit for them. In these situations, operators cannot afford the risk that the cloud fails to respond instantly.

Privacy is another issue. Although consumers appreciate the convenience of voice assistants in devices such as smart speakers, they are increasingly concerned that regularly sending recordings of their conversations to the cloud may inadvertently disclose personal information. This concern has grown with the emergence of camera-equipped smart speakers and video-enabled robot assistants. To reassure customers, manufacturers are looking at how to move more DNN processing to the edge, into the end device itself. The main problem is that DNN processing is a poor fit for the architecture of traditional embedded systems.

Traditional embedded processors are not enough for DNN processing

Traditional embedded processors based on CPUs and GPUs cannot efficiently handle DNN workloads on low-power devices. IoT and mobile devices face very strict limits on power and area, yet they need high performance for real-time DNN processing. The combination of power, performance, and area is known as PPA, and it must be optimized for the task at hand.

One way to address these problems is to provide a dedicated hardware engine for DNN processing with access to on-chip temporary memory. The problem with this approach is that developers need a high degree of flexibility, because the structure of each DNN implementation must be adapted to its target application. A DNN designed and trained for speech recognition will use a different mix of convolutional, pooling, and fully connected layers than a DNN built for video. Because machine learning is still immature and continues to evolve, flexibility is crucial for designing solutions that can accommodate future networks.

Another common approach is to add a vector processing unit (VPU) to a standard processor. This makes programming more efficient and provides the flexibility to handle different types of networks, but it is still not enough. DNN processing involves reading data from external DDR memory, which is extremely power-hungry. A complete solution must therefore also pay close attention to data efficiency and memory access. The VPU is just one of the modules an AI processor needs to achieve efficiency, scalability, and flexibility.

Optimizing bandwidth and throughput

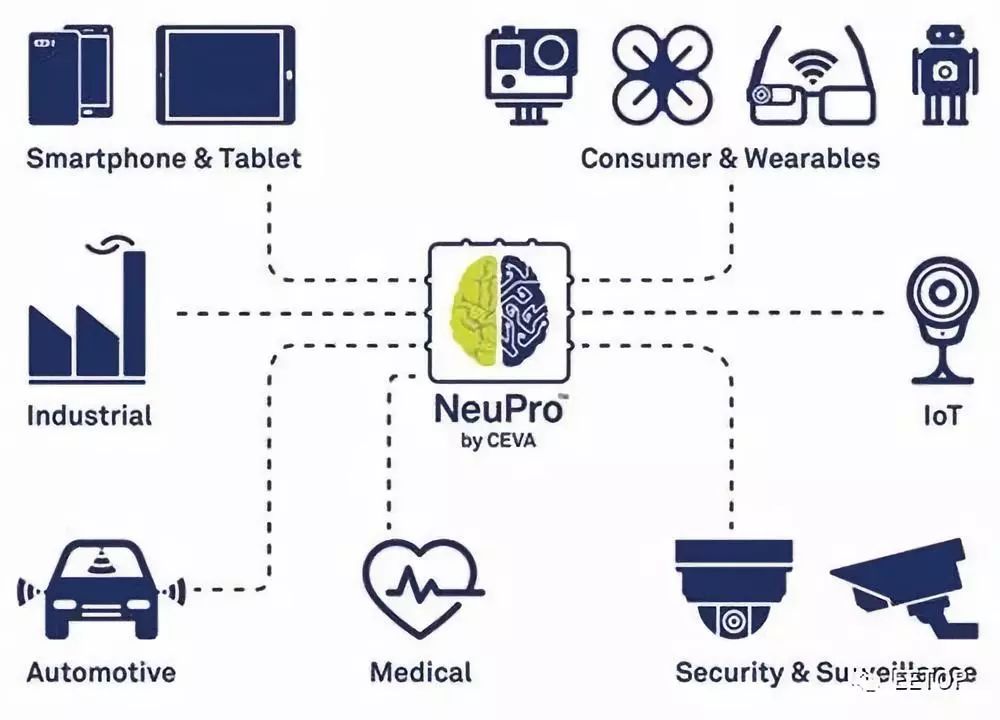

For example, CEVA has developed an architecture that addresses the performance challenges of DNNs while retaining the flexibility needed to handle the widest variety of embedded deep learning applications. The NeuPro AI processor combines a specialized, optimized DNN inference hardware engine, which handles convolutional, fully connected, activation, and pooling layers, with an efficient, programmable VPU that handles unsupported layers and inference software execution. This architecture is paired with the CEVA Deep Neural Network (CDNN) software framework, which enables optimized graph compilation and runtime execution.

NeuPro's scalable and flexible structure for a variety of different AI applications

Supporting both 8-bit and 16-bit arithmetic allows further performance optimization. Some operations require the precision of 16-bit calculations, while in other cases 8-bit calculations achieve almost the same results with a significantly lighter workload and therefore lower power consumption. The NeuPro engine balances these mixed-precision operations so that each layer executes optimally.
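The trade-off between 8-bit and 16-bit arithmetic can be illustrated with a minimal sketch of symmetric linear quantization. This is a generic textbook scheme, not the method used by NeuPro or CDNN. The helper names, the random stand-in weights, and the error tolerances are all hypothetical.

```python
import random


def quantize(values, bits):
    """Symmetric linear quantization to signed integers of the given width."""
    qmax = 2 ** (bits - 1) - 1            # 127 for 8-bit, 32767 for 16-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [round(v / scale) for v in values], scale


def max_abs_error(values, bits):
    """Largest round-trip error after quantizing and dequantizing."""
    quantized, scale = quantize(values, bits)
    return max(abs(v - q * scale) for v, q in zip(values, quantized))


def choose_bits(values, tolerance):
    """Hypothetical per-layer rule: use 8-bit if it is accurate enough."""
    return 8 if max_abs_error(values, 8) <= tolerance else 16


random.seed(0)
# Stand-in for one layer's weights; real weights would come from a trained model.
weights = [random.uniform(-1.0, 1.0) for _ in range(1000)]

err8 = max_abs_error(weights, 8)
err16 = max_abs_error(weights, 16)
print(f"8-bit max error:  {err8:.6f}")
print(f"16-bit max error: {err16:.6f}")
print("loose tolerance  ->", choose_bits(weights, 1e-2), "bits")
print("strict tolerance ->", choose_bits(weights, 1e-4), "bits")
```

A layer that tolerates the coarser 8-bit error can run with half the data width, while a precision-sensitive layer falls back to 16-bit. A production toolchain would typically measure the effect on end-to-end network accuracy rather than on raw weight error.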

Selecting 8-bit or 16-bit calculations layer by layer provides the best balance of accuracy and performance

Combining optimized hardware modules, a VPU, and an efficient memory system yields a scalable, flexible, and highly efficient solution. In addition, CDNN simplifies development with one-click network conversion and off-the-shelf library modules. The end result is a fully featured AI processor that lets IoT device designers take full advantage of local machine learning in their next generation of products.
