Understanding the open-source datasets for semantic segmentation of urban driving scenes can help engineers train autonomous driving models.

Over the last 10 years, a lot of effort has gone into creating semantically segmented datasets and advancing the algorithms that use them. Recently, thanks to developments in deep learning, a lot of progress has been made in the subfields of visual scene understanding. The drawback of deep learning is that it requires a large amount of annotated data. Here we have compiled a set of widely used datasets for urban semantic segmentation, hoping to provide a reference for the field of automated driving.

This is the first article in our series on semantic segmentation datasets for automated driving. Semantic segmentation labeling means marking every pixel in an image according to the class of the object it belongs to. These classes may be pedestrians, vehicles, buildings, the sky, vegetation, and so on. For example, semantic segmentation can help self-driving cars (SDCs) identify the drivable areas in an image.
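To make the idea of pixel-level labels concrete, here is a minimal sketch in plain Python. The class IDs and the tiny 4x6 label image are hypothetical and chosen only for illustration; each real dataset defines its own label map and image sizes.

```python
# Hypothetical class IDs for illustration; real datasets define their own label maps.
ROAD, SIDEWALK, VEHICLE, PEDESTRIAN, SKY = 0, 1, 2, 3, 4

# A tiny 4x6 "label image": each entry is the class ID of one pixel.
label_mask = [
    [SKY, SKY, SKY, SKY, SKY, SKY],
    [SIDEWALK, ROAD, ROAD, VEHICLE, ROAD, SIDEWALK],
    [SIDEWALK, ROAD, ROAD, ROAD, ROAD, SIDEWALK],
    [SIDEWALK, ROAD, PEDESTRIAN, ROAD, ROAD, SIDEWALK],
]


def drivable_mask(mask, drivable_classes=(ROAD,)):
    """Return a binary mask: 1 where the pixel belongs to a drivable class."""
    return [[1 if pixel in drivable_classes else 0 for pixel in row] for row in mask]


def drivable_fraction(mask, drivable_classes=(ROAD,)):
    """Return the share of pixels that belong to a drivable class."""
    binary = drivable_mask(mask, drivable_classes)
    total = sum(len(row) for row in binary)
    return sum(sum(row) for row in binary) / total


drivable = drivable_mask(label_mask)
for row in drivable:
    print(row)
print(f"drivable fraction: {drivable_fraction(label_mask):.2f}")
```

A segmentation model is trained to predict a mask like `label_mask` from the raw camera image; the drivable area is then just the set of pixels assigned to the road class.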

| Dataset | Year | Classes | Annotated images | Location | Conditions |
| --- | --- | --- | --- | --- | --- |
| CamVid | 2007 | 32 | 700 | Cambridge | Day |
| KITTI | 2012 | N/A | N/A | Karlsruhe | Day |
| DUS | 2013 | 5 | 500 | Heidelberg | Day |
| CityScapes | 2016 | 30 | 5000 (+20000) | Germany, Switzerland, France | Spring / summer / autumn |
| Vistas | 2017 | 66 | 25000 | United States, Europe, Africa, Asia, Oceania | Sunny, rain, snow, fog, dusk, daytime, evening |

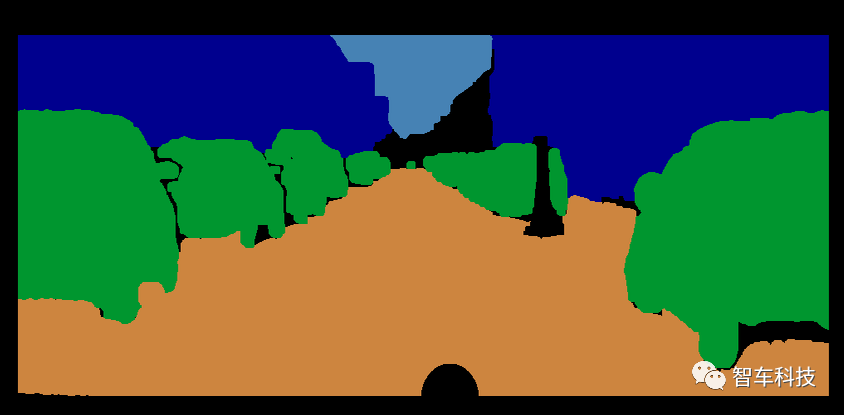

CAMVID

CamVid (960x720px)

CamVid is the earliest semantic segmentation dataset used in the autonomous driving field; it was released at the end of 2007. Its authors used their own image labeling software to annotate 700 images drawn from about 10 minutes of video. The videos were recorded by cameras mounted on the car dashboard, so the viewpoint is essentially the same as the driver's.

KITTI

KITTI Dataset (1242*375px)

The KITTI dataset was published in 2012. It did not originally include semantic segmentation labels; these were added later by another team. However, the dataset does not include annotations for the road. This small dataset was captured with a set of sensors mounted on top of the car, including grayscale and color cameras, a laser scanner, and a GPS/IMU unit.

DUS

DUS Dataset (1024*440px)

This dataset includes 5,000 grayscale images, of which only 500 have semantic segmentation annotations. Unlike the other datasets, it does not include a "nature" category. Because of its small size, it is well suited to quick tests of a semantic segmentation model's performance.

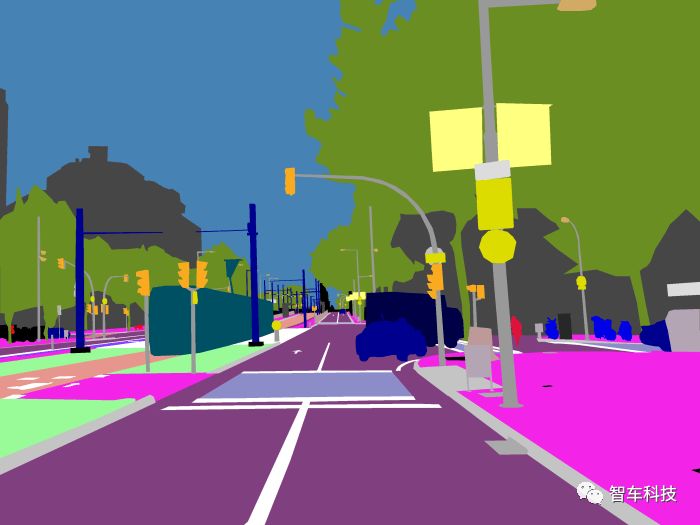

CityScapes

Cityscapes Dataset (2048*1024px)

Cityscapes is an expanded successor to the DUS dataset, recorded across more locations and weather conditions to capture more of the variation in urban street scenes. As with DUS, the camera is mounted behind the windshield. The 30 classes are grouped into 8 categories. One distinctive feature of this dataset is that, in addition to the finely annotated images, it provides about 20,000 coarsely annotated images, intended to support methods that exploit large amounts of weakly labeled data. Many deep learning methods use this benchmark dataset to measure and improve their IoU (intersection over union) scores.
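The grouping of fine-grained classes into broader categories can be sketched as a simple lookup table. The mapping below is an illustrative subset written from memory of the Cityscapes label definitions, not the full official list, and the helper name `to_categories` is hypothetical.

```python
# Illustrative subset only; consult the official Cityscapes label
# definitions for the complete class and category list.
CLASS_TO_CATEGORY = {
    "road": "flat",
    "sidewalk": "flat",
    "person": "human",
    "rider": "human",
    "car": "vehicle",
    "bus": "vehicle",
    "building": "construction",
    "vegetation": "nature",
    "sky": "sky",
}


def to_categories(class_mask):
    """Replace each pixel's class name with the name of its category."""
    return [[CLASS_TO_CATEGORY[name] for name in row] for row in class_mask]


example = [
    ["sky", "building", "vegetation"],
    ["road", "car", "person"],
]
print(to_categories(example))
```

Evaluating at category level is more forgiving than at class level, because confusing a car with a bus, for instance, no longer counts as an error.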

The most recent models generally score above 80% IoU. The Cityscapes website describes the scoring system and implementation guidelines.
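For readers unfamiliar with the metric, the IoU of a class is the number of pixels correctly assigned to that class divided by the number of pixels assigned to it in either the prediction or the ground truth: IoU = TP / (TP + FP + FN). The following is a minimal plain-Python sketch of that formula on a toy example; the function names are hypothetical, and the official benchmark scripts may accumulate counts across the whole test set and handle ignored labels differently.

```python
def class_iou(pred, truth, cls):
    """IoU for one class: TP / (TP + FP + FN), or None if the class never occurs."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p == cls and t == cls:
                tp += 1  # predicted cls, and it really is cls
            elif p == cls:
                fp += 1  # predicted cls, but it is something else
            elif t == cls:
                fn += 1  # missed a pixel that is really cls
    union = tp + fp + fn
    return tp / union if union else None


def mean_iou(pred, truth, classes):
    """Average the per-class IoU over the classes that occur at least once."""
    scores = [class_iou(pred, truth, c) for c in classes]
    scores = [s for s in scores if s is not None]
    if not scores:
        raise ValueError("none of the given classes occur in pred or truth")
    return sum(scores) / len(scores)


# Toy 2x3 masks with two classes (0 and 1).
truth = [[0, 0, 1],
         [0, 1, 1]]
pred = [[0, 1, 1],
        [0, 1, 1]]

print(class_iou(pred, truth, 0))  # 2 / 3
print(class_iou(pred, truth, 1))  # 3 / 4
print(mean_iou(pred, truth, classes=[0, 1]))
```

Here a single mislabeled pixel lowers the score of both classes, which is why IoU is a stricter measure than plain pixel accuracy.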

Mapillary

Mapillary Vistas Dataset

Mapillary is a street-level imagery platform whose registered users collaborate to build better maps. It has released part of its imagery as a dataset annotated with pixel-level accuracy. At the time of writing, it was the largest and most diverse open dataset of its kind in the world, spanning multiple continents.

Because the images on this platform are contributed by the public, they vary widely in type.
