Lei Feng Network (search "Lei Feng Net" on WeChat to follow): Translated by Qu Xiaofeng, a doctoral student at the Human Biometrics Research Center, The Hong Kong Polytechnic University. This article was originally published on a WeChat public account.

Some people say that artificial intelligence (AI) is the future, some say it is science fiction, and others say it is already part of our daily lives. All of these assessments are correct, depending on which kind of AI you mean.

Earlier this year, Google DeepMind's AlphaGo defeated South Korean Go master Lee Sedol. When the media described DeepMind's victory, they used the terms artificial intelligence (AI), machine learning, and deep learning. All three played a part in AlphaGo's win over Lee Sedol, but they are not the same thing.

Here we use the simplest device, concentric circles, to show how the three relate to one another and where each is applied.

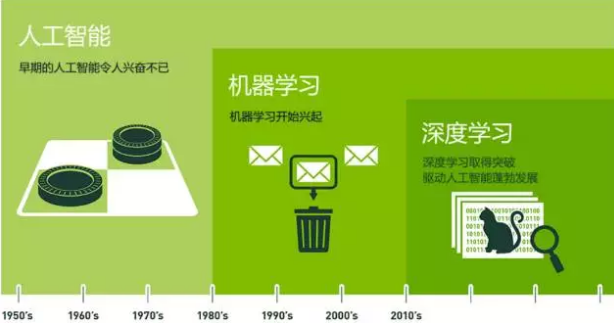

As the figure shows, artificial intelligence is the outermost and largest circle, and the idea that came first. Machine learning, which came later, sits inside it. Deep learning, the innermost circle, is the core driver of today's AI boom.

AI enjoyed a wave of optimism in the 1950s. Smaller subsets of AI developed later: first machine learning, then deep learning, which is in turn a subset of machine learning. Deep learning has had an unprecedented impact.

| From conception to prosperity

In 1956, a group of computer scientists gathered at the Dartmouth Conference and coined the term "artificial intelligence." Since then, AI has lived in our imaginations and slowly incubated in research labs. In the decades that followed, opinion swung between two extremes: AI was either hailed as the key to human civilization's brightest future or thrown on technology's trash heap as the fantasy of overreaching technologists. Frankly, until 2012, both views coexisted.

AI has exploded over the past few years, especially since 2015. Much of that is due to the wide availability of GPUs, which make parallel processing ever faster, cheaper, and more powerful. It is also due to the combination of practically unlimited storage and a sudden flood of data of every kind (big data): images, text, transactions, mapping data, and more.

Let's look at how computer scientists took AI from those early ideas to applications used by hundreds of millions of people every day.

| Artificial Intelligence - Endowing Machines with Human Intelligence

As early as the summer of 1956, the pioneers of AI dreamed of using the newly emerging computers to build complex machines with the same essential characteristics as human intelligence. This is what we now call "strong AI" (General AI): an all-capable machine with all of our senses (perhaps even more than we have) and all of our reason, able to think the way we do.

We see such machines in movies all the time: friendly ones like C-3PO in Star Wars and evil ones like the Terminator. Strong AI still exists only in movies and science fiction, and the reason is easy to see: we cannot build it yet, at least not for now.

What we can build is generally known as "weak AI" (Narrow AI): technology that performs a specific task as well as, or better than, a human. Examples include image classification on Pinterest and face recognition on Facebook.

These are examples of weak AI in practice. Each implements some specific part of human intelligence. But how is that achieved, and where does the intelligence come from? That brings us to the next circle inward: machine learning.

| Machine Learning - An Approach to Artificial Intelligence

At its most basic, machine learning means using algorithms to parse data, learn from it, and then make a decision or prediction about something in the real world. Instead of hand-coding a program with a specific set of instructions for a particular task, the machine is "trained" on large amounts of data and learns from that data how to perform the task.
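To make the contrast concrete, here is a minimal Python sketch on a toy one-feature task with made-up numbers. `hard_coded_is_large` uses a cutoff a programmer picked in advance, while the hypothetical `learn_threshold` picks the cutoff that makes the fewest mistakes on labeled examples. This is a one-split decision stump, one of the simplest learning methods, offered as an illustration rather than anything described in the article.

```python
def hard_coded_is_large(x: float) -> bool:
    # Traditional software: a human picks the rule (cutoff 5.0) in advance.
    return x > 5.0


def learn_threshold(xs: list[float], labels: list[bool]) -> float:
    """Learn a cutoff from labeled data instead of hand-coding it.

    Candidate cutoffs lie below the smallest value, between each pair of
    neighbouring sorted values, and above the largest value; the one with
    the fewest training errors wins (ties go to the smallest cutoff).
    """
    pts = sorted(xs)
    candidates = [pts[0] - 1.0]
    candidates += [(a + b) / 2 for a, b in zip(pts, pts[1:])]
    candidates.append(pts[-1] + 1.0)

    def errors(t: float) -> int:
        # Count examples where the rule "x > t" disagrees with the label.
        return sum((x > t) != y for x, y in zip(xs, labels))

    return min(candidates, key=errors)


xs = [1.0, 2.0, 3.0, 4.0, 6.0, 7.0]
labels = [False, False, False, True, True, True]
cutoff = learn_threshold(xs, labels)
print(cutoff)  # 3.5
print([hard_coded_is_large(x) for x in xs])  # [False, False, False, False, True, True]
```

The learned cutoff fits every training example, while the hand-picked one misclassifies 4.0. The learned rule adapts to whatever data it is given.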

Machine learning grew directly out of the early AI community. Traditional algorithmic approaches include decision tree learning, inductive logic programming, clustering, reinforcement learning, and Bayesian networks. As we know, none of them achieved strong AI, and early machine learning methods largely fell short of even weak AI.
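Clustering, one of the traditional approaches listed above, fits in a few lines. The following is a minimal one-dimensional k-means sketch in Python. It uses made-up data and a simple deterministic initialization (starting centers spread across the sorted points), which is an assumption for readability; practical implementations use more robust initialization.

```python
def kmeans_1d(points: list[float], k: int, max_iters: int = 100) -> list[float]:
    """Group 1-D points into k clusters; return the cluster centers (means)."""
    pts = sorted(points)
    if k == 1:
        return [sum(pts) / len(pts)]
    # Deterministic init: spread the k starting centers across the sorted data.
    centers = [pts[i * (len(pts) - 1) // (k - 1)] for i in range(k)]
    for _ in range(max_iters):
        # Assignment step: attach each point to its nearest center.
        clusters: list[list[float]] = [[] for _ in range(k)]
        for p in pts:
            nearest = min(range(k), key=lambda j: abs(p - centers[j]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its points (keep it if empty).
        new_centers = [sum(c) / len(c) if c else centers[j] for j, c in enumerate(clusters)]
        if new_centers == centers:  # converged: centers stopped moving
            break
        centers = new_centers
    return centers


# No labels are given; the algorithm finds the two groups on its own.
readings = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
print(kmeans_1d(readings, k=2))  # [2.0, 11.0]
```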

For many years, one of the most successful application areas of machine learning was computer vision, although it still required a great deal of hand-coding to get the job done. People wrote classifiers by hand: edge detection filters so the program could tell where an object starts and ends, shape detection to determine whether the object has eight sides, and a classifier to recognize the letters "S-T-O-P". From all of these hand-written classifiers, they built algorithms that could interpret an image and determine whether it shows a stop sign.

That result is decent, but not impressive. On a foggy day when the sign is hard to see, or when a tree blocks part of it, the algorithm often fails. This is why, until recently, computer vision came nowhere near human performance: it was too brittle and too easily thrown off by environmental conditions.
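The hand-coded approach can be sketched in Python as a fixed rule over features. The detectors themselves are not shown. `StopSignFeatures` is a hypothetical stand-in for the outputs of hand-written shape, color, and letter detectors, and the rule is an illustration rather than any real system. The second example shows the brittleness: when a tree hides part of the lettering, the rule simply fails.

```python
from dataclasses import dataclass


@dataclass
class StopSignFeatures:
    """Hypothetical outputs of hand-written detectors (not a real API)."""
    num_sides: int  # from a hand-written shape detector
    is_red: bool    # from a hand-written color check
    letters: str    # from a hand-written letter classifier


def is_stop_sign(f: StopSignFeatures) -> bool:
    # Every condition was chosen by a person; nothing is learned from data.
    return f.num_sides == 8 and f.is_red and f.letters == "STOP"


clear_view = StopSignFeatures(num_sides=8, is_red=True, letters="STOP")
# A tree covers half the sign, so the letter classifier only finds "ST".
partly_hidden = StopSignFeatures(num_sides=8, is_red=True, letters="ST")

print(is_stop_sign(clear_view))     # True
print(is_stop_sign(partly_hidden))  # False
```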

Over time, better learning algorithms changed all of that.

| Deep learning - an implementation of machine learning technology

Artificial neural networks are an important algorithm from early machine learning that rose and fell repeatedly over several decades. Neural networks are inspired by the physiology of the brain, where neurons are interconnected. But unlike a biological brain, where any neuron can connect to any other neuron within a certain physical distance, artificial neural networks have discrete layers, connections, and directions of data propagation.

For example, we can split an image into blocks and feed them into the first layer of a neural network. Each neuron in the first layer passes data to the second layer. The second-layer neurons do the same, passing data on to the third layer, and so on, until the final layer produces the result.
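The layer-by-layer flow can be sketched in plain Python. This sketch rests on simplifying assumptions that are not from the article: every layer is fully connected, each neuron applies a ReLU activation, and the weights are arbitrary hand-picked numbers rather than trained values.

```python
def dense_layer(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """One fully connected layer: each neuron sums its weighted inputs, then applies ReLU."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU: negative totals become 0
    return outputs


def forward(inputs: list[float], layers: list[tuple[list[list[float]], list[float]]]) -> list[float]:
    """Feed data through the layers in order; each layer's output is the next layer's input."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Two input values (e.g. from one image block), a hidden layer of 3 neurons, an output layer of 1.
layers = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 1.0, 1.0]], [0.0]),
]
print(forward([1.0, 2.0], layers))  # [6.0]
```

Data only flows forward, from one discrete layer to the next, which is the structural difference from a biological brain described above.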

Each neuron assigns a weight to each of its inputs, expressing how correct or incorrect that input is relative to the task being performed. The final output is determined by the sum of these weighted inputs.
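A single neuron's weighted sum can be shown on the stop-sign attributes. In this hypothetical sketch, the inputs are made-up 0/1 scores for "octagonal", "red", and "has STOP letters", the weights and bias are chosen by hand, and a sigmoid squashes the total into the range 0 to 1 (the article does not name an activation function). It shows why the weights matter: the same inputs give a very different output when the weights are poorly chosen.

```python
import math


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of inputs plus bias, squashed into (0, 1) by a sigmoid."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-total))


stop_sign_evidence = [1.0, 1.0, 1.0]  # octagonal, red, has "STOP" letters (made-up scores)
good_weights, good_bias = [2.0, 2.0, 2.0], -3.0
bad_weights, bad_bias = [-2.0, 0.0, 0.0], 0.0

print(round(neuron(stop_sign_evidence, good_weights, good_bias), 3))  # 0.953
print(round(neuron(stop_sign_evidence, bad_weights, bad_bias), 3))    # 0.119
```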

Let's return to the stop sign example. The attributes of a stop sign image are broken apart and "examined" by the neurons: its octagonal shape, its fire-engine red color, its distinctive letters, its traffic-sign size, its lack of motion, and so on. The network's task is to decide whether the image is a stop sign. Based on all of the weights, it produces a "probability vector", which is really a highly educated guess.

In this example, the system might conclude that the image is 86% likely to be a stop sign, 7% likely to be a speed limit sign, 5% likely to be a kite stuck in a tree, and so on. The network architecture then tells the neural network whether its conclusion is correct.
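Networks commonly produce such a probability vector with a softmax function, which turns raw output scores into positive numbers that sum to 1. The article does not say which function its example uses, so treat this as an illustrative sketch. The scores are chosen as logarithms of the article's percentages, with a hypothetical "other" class taking the remaining 2%, so that softmax reproduces 86%, 7%, and 5%.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into probabilities that are positive and sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


labels = ["stop sign", "speed limit sign", "kite in a tree", "other"]
scores = [math.log(p) for p in [0.86, 0.07, 0.05, 0.02]]  # hypothetical raw network scores
probs = softmax(scores)
for label, p in zip(labels, probs):
    print(f"{label}: {p:.0%}")
```

The highest-probability label, "stop sign", is the network's best guess, and the other entries show how confident it is in the alternatives.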

Even this example gets ahead of the story. Until recently, neural networks were all but ignored by the AI community. They had existed since the earliest days of AI, but they contributed little to "intelligence". The main problem was that even the most basic neural networks required a great deal of computation, and that demand was hard to meet.

Still, a small group of devoted researchers, led by Geoffrey Hinton at the University of Toronto, kept at it. They parallelized the algorithms to run on supercomputers and proved the concept, but the effort only paid off once GPUs came into wide use.

Back to the stop sign example. While a neural network is being tuned and trained, it gets many answers wrong. What it needs most is training: it must see hundreds of thousands or even millions of images, until the weights on its neuron inputs are tuned so precisely that it gets the right answer practically every time, in fog, sun, or rain.
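How training tunes the input weights can be sketched with the simplest trainable network: a single sigmoid neuron trained by stochastic gradient descent on log loss. This is a toy under stated assumptions, not how the article's networks were trained. The two inputs are hypothetical 0/1 scores for "octagonal" and "red", and only examples with both count as stop signs. The weights start at zero, so the untrained neuron answers 0.5 (a coin flip) for everything. Repeated passes over the examples nudge each weight to reduce the error.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: list[float], b: float, x: list[float]) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train(examples, epochs: int = 1000, lr: float = 0.5):
    """Stochastic gradient descent on log loss for a single sigmoid neuron."""
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in examples:
            err = predict(w, b, x) - y  # gradient of log loss w.r.t. the weighted sum
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


# (octagonal, red) -> 1 if stop sign else 0  (hypothetical toy data)
examples = [([1.0, 1.0], 1), ([1.0, 0.0], 0), ([0.0, 1.0], 0), ([0.0, 0.0], 0)]
print(predict([0.0, 0.0], 0.0, [1.0, 1.0]))  # 0.5 before training
w, b = train(examples)
for x, y in examples:
    print(x, y, round(predict(w, b, x), 3))
```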

Only then can we say the neural network has learned what a stop sign looks like; or, in Facebook's case, what your mother's face looks like; or, as Professor Andrew Ng achieved at Google in 2012, what a cat looks like.

Ng's breakthrough was to take these neural networks and make them fundamentally much larger, with many more layers and many more neurons, and then feed massive amounts of data through the system to train it. In Ng's case, the data was images from 10 million YouTube videos. Ng put the "deep" in deep learning: "depth" here refers to the many layers in these neural networks.
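Making a network "deeper" means adding layers, and every added layer brings more weights that training must tune, so depth and computation grow together. The sketch below counts layers and trainable parameters (weights plus biases) for a fully connected network. The layer sizes are hypothetical and unrelated to the network described above.

```python
def depth(layer_sizes: list[int]) -> int:
    """Number of weight layers; the input layer itself has no weights."""
    return len(layer_sizes) - 1


def count_parameters(layer_sizes: list[int]) -> int:
    """Weights plus biases for a fully connected network with these layer widths."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


shallow = [4, 3, 2]     # input, one hidden layer, output
deep = [4, 3, 3, 3, 2]  # input, three hidden layers, output
print(depth(shallow), count_parameters(shallow))  # 2 23
print(depth(deep), count_parameters(deep))        # 4 47
```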

Today, image recognition trained with deep learning can outperform humans in some scenarios, from identifying cats to identifying indicators of cancer in blood and tumors in MRI scans. Google's AlphaGo first learned how to play Go and then trained itself: it tuned its neural network by playing against itself over and over, without stopping.

| Learning Deeper, Giving Artificial Intelligence a Bright Future

Deep learning has enabled a wide range of practical machine learning applications and, by extension, has expanded the reach of AI as a whole. Deep learning breaks tasks down in ways that make all kinds of machine assistance seem possible. Driverless cars, better preventive health care, and even better movie recommendations are either here today or on the horizon.

AI is the present and the future. With the help of deep learning, AI may even reach the science-fiction future we have long imagined. I'll take your C-3PO; you can keep your Terminator.

Lei Feng Network note: The original English article was published on NVIDIA's official website; the author is Michael Copeland, a longtime technology reporter. This translation is published on Lei Feng Network with authorization from a WeChat public account. To reprint, please contact the source for authorization, credit the source and author, and do not abridge the article.
