Machine vision is traditionally defined as electronic imaging for inspection, process control, and automatic navigation. In machine vision applications, computers rather than humans use imaging techniques to capture images as input, with the aim of extracting and delivering information. According to Memes Consulting, advanced digital imaging technology is required for advanced industrial applications, advanced driver assistance systems (ADAS), augmented reality and virtual reality (AR/VR), and smart security systems. This technology allows machine vision to "see" more sharply and farther in low light or no light, without visible light sources that would interfere with normal human activities.

Historically, machine vision has relied on large amounts of light to capture images. Available sources include fluorescent, quartz halogen, LED, metal halide (mercury), and xenon lamps. Used on its own, any one of these sources consumes a lot of power and yields poor image quality, so they do not meet the needs of applications beyond traditional industrial ones. AR/VR, security systems, and ADAS driver monitoring use eye tracking, face recognition, and gesture control, and ADAS surround-view cameras add night vision. To achieve the desired results, these applications require illumination outside the visible spectrum. In the past few years, advances in digital near-infrared (NIR) imaging technology have revolutionized machine vision and night vision capabilities.

Why is NIR a necessary condition for current machine vision applications?

NIR illuminates objects or scenes outside the visible spectrum and allows the camera to "see" in low or no light conditions beyond human visual capabilities.
Although some applications still need low-power LEDs to supplement NIR, NIR illumination requires very little power and hardly disturbs users. In applications such as AR/VR or driver monitoring systems, these properties are very important for accurate eye tracking and gesture control. In security camera applications, NIR can monitor an intruder without the intruder knowing. In addition, more NIR photons than visible photons are available at night, which makes NIR an ideal choice for night vision applications.

For example, compare the advantages and disadvantages of two night vision approaches in ADAS. One car manufacturer uses a passive far-infrared (FIR) system that records images based on the heat of objects and displays them as bright negatives. Although its effective detection range is as long as 980 feet, the image is not sharp because it depends on the amount of heat each object emits. Another automaker uses NIR technology, which produces sharp, clear images in the dark, much like shooting under high beams, and captures the image regardless of the temperature of the object. However, the NIR system has a maximum effective detection range of 600 feet.

Limitations of NIR

In most cases NIR is a significant improvement over the alternatives, but using it is not without challenges. The effective range of an NIR imaging system is directly related to its sensitivity. Under the best conditions, current NIR sensor architectures are sensitive to wavelengths up to about 800 nm. If that sensitivity can be extended to 850 nm or beyond, the effective range can be extended further. The effective range of NIR imaging is determined by two key parameters: quantum efficiency (QE) and modulation transfer function (MTF). The QE of an imager is the ratio of the photons it converts to electrons to the photons it captures.
The higher the QE, the farther the NIR illumination reaches and the brighter the image. A QE of 100% means that every captured photon is converted to an electron, giving the brightest possible image. At present, however, even the best NIR sensor technology achieves only 58% QE. MTF measures the ability of an image sensor to transfer contrast from an object to the image at a specific resolution. The higher the MTF, the sharper the image. MTF is degraded by noise from signal electrons that escape their pixel, so to maintain a stable MTF and a sharp image, the electrons must stay in the pixel where they were generated.
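
The two parameters can be made concrete with a short Python sketch. It is illustrative only: the function names and the sample photon counts and intensity levels are assumptions chosen for the example, not measurements from any sensor, and MTF is approximated here as the ratio of Michelson contrast in the image to Michelson contrast in the object at a single spatial frequency.

```python
def quantum_efficiency(electrons_collected: float, photons_captured: float) -> float:
    """Fraction of captured photons that are converted to electrons."""
    if photons_captured <= 0:
        raise ValueError("photons_captured must be positive")
    return electrons_collected / photons_captured


def michelson_contrast(i_max: float, i_min: float) -> float:
    """Contrast of a periodic pattern from its brightest and darkest levels."""
    return (i_max - i_min) / (i_max + i_min)


def mtf(object_max: float, object_min: float,
        image_max: float, image_min: float) -> float:
    """Image contrast divided by object contrast at one spatial frequency."""
    return (michelson_contrast(image_max, image_min)
            / michelson_contrast(object_max, object_min))


# Hypothetical numbers: 1000 captured photons yield 580 electrons.
qe = quantum_efficiency(electrons_collected=580, photons_captured=1000)

# Hypothetical test pattern with full contrast (levels 1.0 and 0.0).
# Crosstalk between pixels pulls bright and dark levels toward each other.
sharp = mtf(1.0, 0.0, image_max=0.9, image_min=0.1)
blurry = mtf(1.0, 0.0, image_max=0.6, image_min=0.4)

print(f"QE = {qe:.0%}")
print(f"MTF (little crosstalk) = {sharp:.2f}")
print(f"MTF (heavy crosstalk)  = {blurry:.2f}")
```

In this toy model, electrons that leak into neighboring pixels raise the dark level and lower the bright level, which is why the second pattern scores a much lower MTF even though the total signal is the same.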

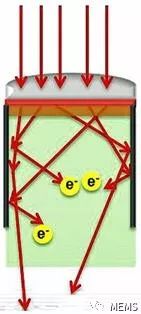

Figure 1. This simulated image shows the clear difference between low MTF and high MTF.

Challenges of existing solutions

As illumination moves beyond the visible range into the NIR, its wavelength increases. As a result, the QE of silicon falls, because photons are converted less efficiently in the crystal, and thicker silicon is needed to absorb the same number of photons. The traditional way to increase QE is therefore to use thick silicon, which gives photons a greater chance of being absorbed than thin silicon does, providing higher QE and a stronger signal. In a single-pixel detector, thick silicon raises NIR QE to over 90%. However, when the application requires smaller pixels and the silicon thickness keeps growing, up to 100 μm, photons stray into adjacent pixels, causing crosstalk that reduces the MTF. The image sensor becomes more sensitive to NIR illumination, but its resolution is lower and the resulting image is brighter yet blurry. One way to solve this problem is deep trench isolation (DTI), which creates a barrier between pixels. Although standard DTI has been proven to improve MTF, it can also create defects that corrupt dark areas of the image. This has been a challenge for companies working to improve NIR illumination for machine vision applications.

Technological breakthroughs

Recent technological breakthroughs have addressed the problems of relying on thick silicon alone to increase photon absorption. First, an upgraded DTI method, developed on an advanced 300 mm fabrication process, creates a silicon oxide barrier between adjacent pixels. The refractive index change between oxide and silicon confines light optically within the same pixel.
Unlike traditional DTI, the upgraded DTI deepens the trenches rather than widening them, so the trenches stay narrow, which helps confine photons. Second, an absorption structure similar to the pyramid texture used in solar cell processing is formed on the wafer surface to create a scattering optical layer. Careful implementation of this layer prevents defects in the dark areas of the image and further increases the path length of photons in the silicon. The shape of the structure makes light travel a longer, oblique path inside the silicon instead of passing straight up or down. Splitting and scattering the light lengthens its path, so light is reflected back and forth within the pixel like a table tennis ball, which increases its probability of being absorbed. Getting the angle of the absorption structure right is critical to the effectiveness of the scattering layer: if the angle is wrong, photons may be reflected into the neighboring pixel instead of returning to the original one.
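
The link between path length and absorption probability can be sketched with the Beer-Lambert law, under which the absorbed fraction is 1 - exp(-αL) for path length L. The Python below is a rough illustration, not a model of any actual sensor: the absorption coefficient is an approximate literature value for crystalline silicon near 850 nm, and the 3 μm thickness, 45° scattering angle, and four passes are hypothetical values chosen for the example. Reflection losses at the surfaces and trench walls are ignored.

```python
import math

# Assumption: roughly 535 cm^-1 for crystalline silicon near 850 nm,
# expressed per micrometre. Treat this as an approximate value.
ALPHA_850NM_PER_UM = 0.0535


def absorbed_fraction(path_length_um: float,
                      alpha_per_um: float = ALPHA_850NM_PER_UM) -> float:
    """Beer-Lambert fraction of photons absorbed along a path of given length."""
    return 1.0 - math.exp(-alpha_per_um * path_length_um)


def oblique_path_um(thickness_um: float, angle_deg: float) -> float:
    """Length of one pass through a slab for a ray tilted from the surface normal."""
    return thickness_um / math.cos(math.radians(angle_deg))


thickness_um = 3.0   # hypothetical silicon thickness
angle_deg = 45.0     # hypothetical scattering angle from the surface structure
passes = 4           # hypothetical number of bounces between confining walls

straight = absorbed_fraction(thickness_um)
tilted = absorbed_fraction(oblique_path_um(thickness_um, angle_deg))
bounced = absorbed_fraction(passes * oblique_path_um(thickness_um, angle_deg))

print(f"straight single pass: {straight:.1%}")
print(f"tilted single pass:   {tilted:.1%}")
print(f"tilted, {passes} passes:     {bounced:.1%}")
```

The point of the sketch is the trend rather than the absolute numbers: tilting the ray and keeping it inside the pixel for several passes lengthens the path, so a thin layer absorbs as if it were considerably thicker.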

Figure 2. The shape of the absorbing structure lengthens the optical path inside the silicon, rather than letting light pass straight up or down.

Conclusion

Through close cooperation with its foundry partners, OmniVision has developed Nyxel NIR technology, which solves the performance problems that often plague NIR development. By combining thick silicon with upgraded DTI and a light-scattering layer that manages surface texture, sensors using Nyxel technology improve QE by a factor of three compared with OmniVision's previous generation of sensors, extending NIR sensitivity to 850 nm without degrading other image quality metrics. The results are convincing. Sensors equipped with this technology provide higher image quality in extremely low light, detect objects at longer distances, and reduce the illumination and power required for operation, meeting the new requirements of advanced machine vision applications such as AR/VR, ADAS, and night vision.
